Agents Standing By: Introduction to Huginn

What is Huginn?

Have you ever felt out of the loop on current events? Do you tire of manually checking dozens of sites every day for the information you need? Do you wish you could quickly whip up a bot without learning a new API and programming language?

I had heard of Huginn before last week, but I had not seen how it could fit into my workflow. Now that I better understand its features, let me explain how Huginn can improve the way you monitor important data.

At its core, Huginn is an event monitoring framework built around agents. An agent is analogous to a function in programming.

An agent listens for a specific type of data (known as an event), performs some logic-based operations on that data, and then emits another event with the processed data.

On their own, agents have limited capability. The true power of Huginn comes from combining agents into a scenario: a tagged collection of agents that usually communicate with each other to perform more complex tasks.
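To make the function analogy concrete, here is a conceptual sketch in Python of agents as functions that take one event and return zero or more events, chained into a scenario. It is not how Huginn is implemented (Huginn is a Ruby on Rails application); `run_scenario`, `big_mover`, and `add_channel` are hypothetical names, and the sample quotes are made up.

```python
from typing import Callable, Dict, List

# An event is just a dictionary of data, much like a Huginn event payload.
Event = Dict[str, object]
# Conceptual model only: an "agent" takes one event and returns zero or more new events.
Agent = Callable[[Event], List[Event]]


def run_scenario(source_events: List[Event], agents: List[Agent]) -> List[Event]:
    """Pass events through a chain of agents, feeding each agent's output to the next."""
    events = source_events
    for agent in agents:
        next_events: List[Event] = []
        for event in events:
            next_events.extend(agent(event))
        events = next_events
    return events


def big_mover(event: Event) -> List[Event]:
    # Hypothetical filter agent: re-emit only quotes that rose 10% or more.
    if float(event["changesPercentage"]) >= 10.0:
        return [{"message": f"{event['symbol']} changed {event['changesPercentage']}%"}]
    return []


def add_channel(event: Event) -> List[Event]:
    # Hypothetical fan-out: one incoming event becomes one event per notification channel.
    return [dict(event, channel="email"), dict(event, channel="push")]


if __name__ == "__main__":
    quotes = [
        {"symbol": "AAA", "changesPercentage": 12.5},  # made-up sample data
        {"symbol": "BBB", "changesPercentage": 1.2},
    ]
    print(run_scenario(quotes, [big_mover, add_channel]))
    # [{'message': 'AAA changed 12.5%', 'channel': 'email'}, {'message': 'AAA changed 12.5%', 'channel': 'push'}]
```

Each agent only knows about the event it receives, which is what lets small agents be rearranged into different scenarios.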

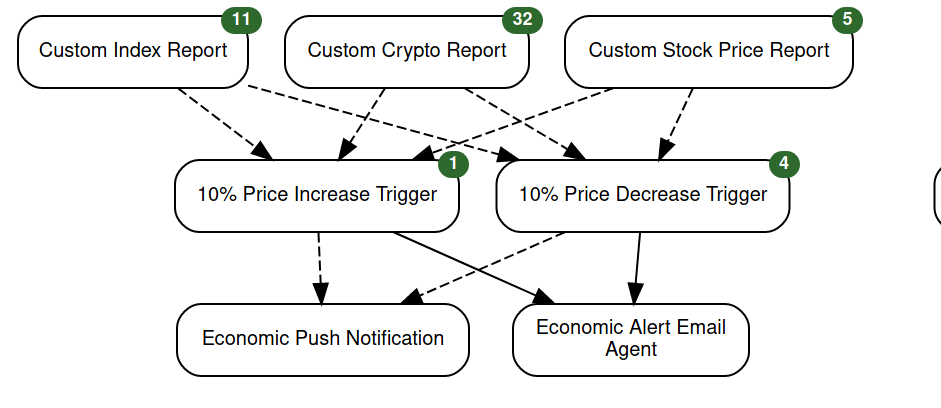

Let me go over an example scenario so you can get an idea of how agents interact.

The top level shows three Website Agents. These scrape the sites of your choice and extract data from HTML, JSON, or XML. I have these Website Agents monitoring JSON feeds of cryptocurrency, market indexes, and stocks.

Here is the JSON for the stock monitoring agent.

```json
{
  "expected_update_period_in_days": "2",
  "url": "https://financialmodelingprep.com/api/v3/quote/AAPL,FB",
  "type": "json",
  "mode": "on_change",
  "extract": {
    "price": {
      "path": "[*].price"
    },
    "symbol": {
      "path": "[*].symbol"
    },
    "name": {
      "path": "[*].name"
    },
    "changesPercentage": {
      "path": "[*].changesPercentage"
    }
  }
}
```
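For readers new to JSONPath, here is a small Python sketch of what the `extract` section does with a response shaped like this feed. It handles only the `[*].field` form used above; it is not a JSONPath library or Huginn's own code, and the sample response (including its numbers) is made up. The step worth noticing is the last one: each extractor returns one value per array element, and the values are combined by position into one event per quote. As I understand the Website Agent documentation, this is why every extractor must return the same number of matches.

```python
import json
from typing import Dict, List

# Made-up response shaped like the quote feed: a JSON array with one object per symbol.
SAMPLE_RESPONSE = """
[
  {"symbol": "AAPL", "name": "Apple Inc.", "price": 100.0, "changesPercentage": 1.5},
  {"symbol": "FB", "name": "Facebook, Inc.", "price": 200.0, "changesPercentage": -2.0}
]
"""

# Same keys and paths as the "extract" section of the Website Agent config.
EXTRACT = {
    "price": {"path": "[*].price"},
    "symbol": {"path": "[*].symbol"},
    "name": {"path": "[*].name"},
    "changesPercentage": {"path": "[*].changesPercentage"},
}


def select_all(data: list, path: str) -> list:
    """Evaluate a path of the form "[*].field" only: collect `field` from every element."""
    prefix = "[*]."
    if not path.startswith(prefix):
        raise ValueError(f"unsupported path: {path}")
    field = path[len(prefix):]
    return [item[field] for item in data]


def extract_events(body: str, extract: Dict[str, Dict[str, str]]) -> List[dict]:
    """Run every extractor, then zip the results by position into one event per element."""
    data = json.loads(body)
    columns = {key: select_all(data, spec["path"]) for key, spec in extract.items()}
    lengths = {len(values) for values in columns.values()}
    if len(lengths) != 1:
        # Every extractor must produce the same number of matches.
        raise ValueError("extractors returned different numbers of matches")
    count = lengths.pop()
    return [{key: values[i] for key, values in columns.items()} for i in range(count)]


if __name__ == "__main__":
    for event in extract_events(SAMPLE_RESPONSE, EXTRACT):
        print(event)
```

With `"mode": "on_change"`, Huginn additionally suppresses events whose content it has already seen, which this sketch does not model.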

The events extracted from these JSON feeds are sent to the middle layer of Trigger Agents. These agents take the incoming event (in this case, JSON stock data) and evaluate it against a value comparison or a regex.

```json
{
  "expected_receive_period_in_days": "2",
  "keep_event": "false",
  "rules": [
    {
      "type": "field>=value",
      "value": "10.00",
      "path": "changesPercentage"
    }
  ],
  "message": "{{name}} changed {{changesPercentage}}%. Current rate is {{price}}$.",
  "must_match": "1"
}
```

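As configured above, the Trigger Agent checks the changesPercentage field of each incoming event and emits a new event when the stock has risen by 10% or more. The single `field>=value` rule does not catch drops; watching for a 10% fall as well would take a second rule, such as `field<=value` with a value of `-10.00`. Because `must_match` is `1`, an event would then fire when either rule matches.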
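The rule logic can be modeled in a few lines of Python. This is a simplified sketch, not Huginn's Ruby implementation: only two comparison types are covered, the `{{...}}` substitution is a tiny stand-in for Liquid, and the second `field<=value` rule for a 10% drop is a hypothetical addition that is not part of the config above. The stock data in the example is made up.

```python
import re
from typing import Dict, List, Optional

# Simplified stand-ins for two Trigger Agent rule types; both sides are compared as numbers.
COMPARATORS = {
    "field>=value": lambda field, value: float(field) >= float(value),
    "field<=value": lambda field, value: float(field) <= float(value),
}

# The rule from the config above, plus a HYPOTHETICAL second rule that catches a 10% drop.
RULES = [
    {"type": "field>=value", "value": "10.00", "path": "changesPercentage"},
    {"type": "field<=value", "value": "-10.00", "path": "changesPercentage"},
]
MESSAGE = "{{name}} changed {{changesPercentage}}%. Current rate is {{price}}$."


def render(template: str, event: Dict[str, object]) -> str:
    """Fill {{key}} placeholders from the event; a tiny subset of Liquid, not the real engine."""
    return re.sub(r"\{\{\s*(\w+)\s*\}\}",
                  lambda m: str(event.get(m.group(1), "")), template)


def trigger(event: Dict[str, object], rules: List[Dict[str, str]], message: str,
            must_match: Optional[str] = None) -> Optional[Dict[str, str]]:
    """Return a new event if enough rules match, otherwise None."""
    matches = sum(
        1 for rule in rules
        if COMPARATORS[rule["type"]](event[rule["path"]], rule["value"])
    )
    # With no must_match every rule has to match; must_match sets a minimum count instead.
    required = len(rules) if must_match is None else int(must_match)
    if matches >= required:
        # keep_event is "false", so the new event carries only the rendered message.
        return {"message": render(message, event)}
    return None


if __name__ == "__main__":
    drop = {"name": "Example Corp", "price": 42.0, "changesPercentage": -12.5}
    print(trigger(drop, RULES[:1], MESSAGE, must_match="1"))  # None: only the +10% rule
    print(trigger(drop, RULES, MESSAGE, must_match="1"))
```

The first call shows the original single rule ignoring a 12.5% fall; adding the second rule and keeping `must_match` at `1` lets either direction fire.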

When these Trigger Agents emit an event, it is a stock fluctuation I want to know about immediately, so the events go to two notification agents. One sends an email, which I see whenever I check my inbox; the other sends me a push notification so I can see events as they happen.

What about Grafana?

The way I see it, Huginn and Grafana fill very separate roles in my workflow. Grafana (and the rest of the TIG stack, Telegraf and InfluxDB) excels at monitoring lots of different data points over time and visualizing them beautifully. For example, I can view how my hard drives are filling up over time, or which Docker container is causing my CPU to throttle.

Huginn is great for the on-the-fly creation of “bots” that monitor very specific data points and perform actions based on them. Maybe I want a text whenever a specific model of CPU goes on sale, or an XKCD comic and a weather report delivered to me in a daily morning email. This is where Huginn shines.

However, I plan to experiment with combining the two. What if I used Huginn to monitor and format a web data source, then sent that nicely formatted data to Grafana to display? I am still figuring out how this would work. I have tried Grafana's JSON datasource, but I have not gotten it working even once.

Does Grafana require the data to be stored in an external database? I could send the Huginn data to InfluxDB, but that seems like a lot of extra work.
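If the data did go to InfluxDB, each Huginn event would first have to be serialized into InfluxDB's line protocol (`measurement,tag=value field=value timestamp`). Below is a minimal Python sketch of that conversion, assuming stock-quote events like the ones above. The measurement name `stock_quote` and the choice of `symbol` as a tag are hypothetical, only numeric fields are handled, and how the line actually reaches InfluxDB is left out.

```python
from typing import Dict, Optional


def escape_measurement(name: str) -> str:
    """Measurement names escape commas and spaces in line protocol."""
    return name.replace(",", r"\,").replace(" ", r"\ ")


def escape_key(text: str) -> str:
    """Tag keys, tag values, and field keys also escape equals signs."""
    return text.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def to_line_protocol(measurement: str, tags: Dict[str, str], fields: Dict[str, float],
                     timestamp_ns: Optional[int] = None) -> str:
    """Build one line: measurement[,tag=value...] field=value[,field=value...] [timestamp]."""
    if not fields:
        raise ValueError("line protocol needs at least one field")
    head = escape_measurement(measurement)
    # InfluxDB recommends sorting tags by key; sorting fields just keeps the output stable.
    for key in sorted(tags):
        head += f",{escape_key(key)}={escape_key(str(tags[key]))}"
    # Numeric fields only, written as floats (no string, integer, or boolean support here).
    body = ",".join(f"{escape_key(k)}={float(v)!r}" for k, v in sorted(fields.items()))
    line = f"{head} {body}"
    return line if timestamp_ns is None else f"{line} {timestamp_ns}"


if __name__ == "__main__":
    event = {"symbol": "AAPL", "price": 100.0, "changesPercentage": 1.5}  # made-up values
    print(to_line_protocol("stock_quote", {"symbol": event["symbol"]},
                           {"price": event["price"],
                            "changesPercentage": event["changesPercentage"]}))
    # stock_quote,symbol=AAPL changesPercentage=1.5,price=100.0
```

Leaving out the timestamp lets the server assign the write time, which is usually acceptable for a feed polled every few minutes.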

Conclusion

In my next post, I will go over installing Huginn with Docker Compose and setting up a basic SMTP server for email notifications. Then we will set up some useful agents and get used to the Huginn workflow.