Agents Standing By: Monitoring National Emergencies

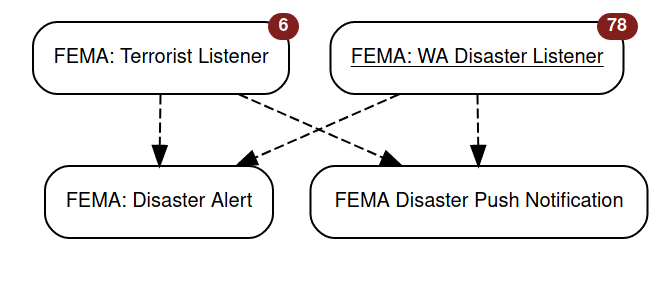

The scenario we will go over today sets up real-time alerts that fire whenever FEMA updates its online database of natural disasters and emergencies.

First, we need to set up a Website Agent. It is similar to our previous agent in that it extracts the target data from a JSON data stream.

Let’s start by experimenting with the API a little. Take a look at the full documentation if you have questions, but this walkthrough should introduce you to the basics.

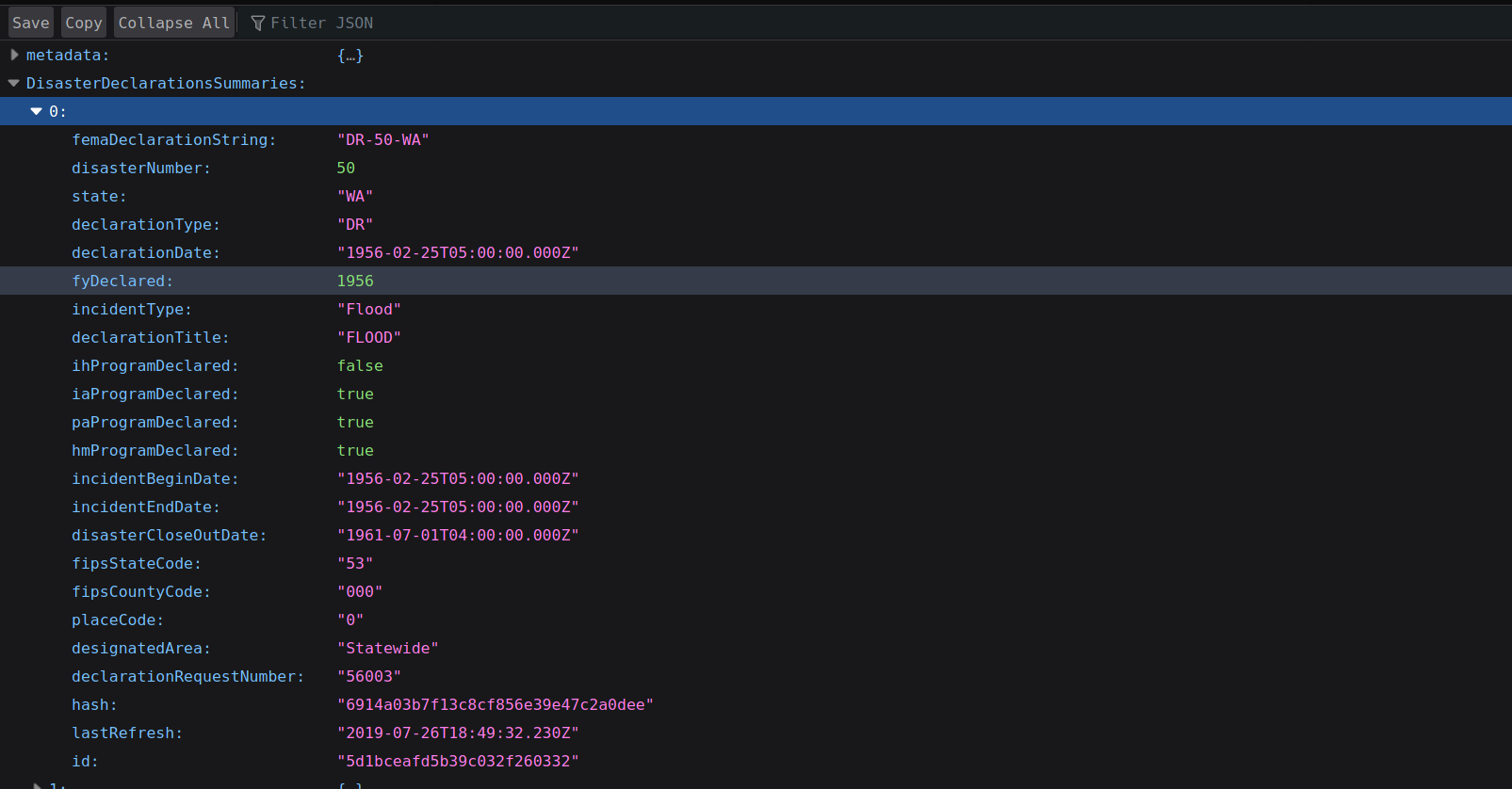

The API page lists every dataset FEMA offers. Let’s select the Disaster Declarations Summaries - V2 dataset.

Under the Data Fields dropdown, we can see every field returned for a disaster declaration. To view the data itself, visit the link under the API Endpoint dropdown.
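If you would rather inspect the endpoint from a script than from the browser, here is a minimal Python sketch using only the standard library. It assumes the response is a JSON object whose records sit under a DisasterDeclarationsSummaries key (the same key the agent's extract paths rely on) and that the endpoint honours a $top option to limit how many records come back; fetch_summaries and parse_summaries are hypothetical helper names.

```python
import json
import urllib.parse
import urllib.request

# Endpoint for the Disaster Declarations Summaries v2 dataset.
ENDPOINT = "https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries"


def parse_summaries(body: str) -> list[dict]:
    """Return the declaration records from a raw JSON response body."""
    payload = json.loads(body)
    # Records live under a key named after the dataset; fall back to [] if absent.
    return payload.get("DisasterDeclarationsSummaries", [])


def fetch_summaries(top: int = 5) -> list[dict]:
    """Fetch a small page of records (assumes the API honours $top)."""
    # safe="$" keeps the "$top" key literal instead of encoding it as %24top.
    query = urllib.parse.urlencode({"$top": top}, safe="$")
    with urllib.request.urlopen(f"{ENDPOINT}?{query}", timeout=30) as resp:
        return parse_summaries(resp.read().decode("utf-8"))
```

Calling fetch_summaries() performs a live request and returns a short list of record dictionaries; parse_summaries can be exercised on its own against a saved response.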

This returns declarations for every state and incident type, so we will usually want to filter the data. Let’s narrow it down to our own state. In my case, I changed the URL to the following:

https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries?$filter=state eq 'WA'

A second URL lists every declaration with the incident type Terrorist, nationwide:

https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries?$filter=incidentType eq 'Terrorist'
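Both URLs contain spaces and quotes, which need to be percent-encoded when the URL goes into an agent; that is why the agent JSON for this scenario contains %20 and %27. A minimal Python sketch of that encoding follows. filter_url is a hypothetical helper, and the quotes around the value must be plain ASCII apostrophes, since typographic quotes would not match anything.

```python
from urllib.parse import quote

ENDPOINT = "https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries"


def filter_url(field: str, value: str) -> str:
    """Build an endpoint URL with a single `field eq 'value'` filter."""
    expression = f"{field} eq '{value}'"
    # quote() turns spaces into %20 and apostrophes into %27.
    return f"{ENDPOINT}?$filter={quote(expression, safe='')}"
```

For example, filter_url("incidentType", "Terrorist") reproduces the URL used in the agent configuration for this scenario.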

We can create Website Agents to monitor any number of specific queries and narrow the list down as far as we like, say to floods in Oregon from 1980 onwards. Take full advantage of the URI Commands section of the documentation, which details these filtering options.
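As a sketch of a narrower query such as floods in Oregon from 1980 onwards, the helper below joins several conditions with `and`. It assumes the OData-style `and`, `ge`, and `$orderby` commands described in the URI Commands documentation; the date literal format is also an assumption to verify there, and query_url is a hypothetical name. Sorting newest first is an optional choice that helps fresh declarations appear near the top of the response.

```python
from typing import Optional
from urllib.parse import quote, urlencode

ENDPOINT = "https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries"


def query_url(clauses: list[str], orderby: Optional[str] = None) -> str:
    """Combine filter clauses with 'and' and encode them as URI commands."""
    params = {"$filter": " and ".join(clauses)}
    if orderby:
        params["$orderby"] = orderby
    # quote_via=quote encodes spaces as %20 (not '+'); safe='$' keeps the keys readable.
    return f"{ENDPOINT}?{urlencode(params, safe='$', quote_via=quote)}"


# Hypothetical example: floods in Oregon declared on or after 1 January 1980, newest first.
oregon_floods = query_url(
    [
        "state eq 'OR'",
        "incidentType eq 'Flood'",
        "declarationDate ge '1980-01-01T00:00:00.000z'",
    ],
    orderby="declarationDate desc",
)
```

The resulting string can be pasted into a Website Agent's url option in place of a single-condition filter.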

The JSON for one of these agents is shown below:

```json
{
  "expected_update_period_in_days": "2",
  "url": "https://www.fema.gov/api/open/v2/DisasterDeclarationsSummaries?$filter=incidentType%20eq%20%27Terrorist%27",
  "type": "json",
  "mode": "on_change",
  "extract": {
    "declarationTitle": {
      "path": "DisasterDeclarationsSummaries.[*].declarationTitle"
    },
    "state": {
      "path": "DisasterDeclarationsSummaries.[*].state"
    },
    "declarationDate": {
      "path": "DisasterDeclarationsSummaries.[*].declarationDate"
    },
    "incidentType": {
      "path": "DisasterDeclarationsSummaries.[*].incidentType"
    },
    "designatedArea": {
      "path": "DisasterDeclarationsSummaries.[*].designatedArea"
    },
    "placeCode": {
      "path": "DisasterDeclarationsSummaries.[*].placeCode"
    }
  }
}
```

This extracts fields such as placeCode and incidentType from every record in the response, and each extracted field can then be used as a variable when formatting your notifications.
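Each path ending in `[*].fieldName` collects that field from every record, and Huginn then pairs the values up by position, so each declaration becomes its own event. If some records lack a field, the real agent may complain about an uneven number of matches. The Python sketch below mimics that pairing on an already-parsed response; it is an illustration rather than Huginn's implementation, and extract_events is a hypothetical name.

```python
from typing import Any

ROOT = "DisasterDeclarationsSummaries"
# The same field names the agent's extract section pulls out.
FIELDS = ["declarationTitle", "state", "declarationDate",
          "incidentType", "designatedArea", "placeCode"]


def extract_events(payload: dict[str, Any]) -> list[dict[str, Any]]:
    """Mimic `ROOT.[*].field` paths: one column per field, then pair by position."""
    records = payload.get(ROOT, [])
    # Each path yields a list of values, one per record (None where a field is missing).
    columns = {field: [r.get(field) for r in records] for field in FIELDS}
    # The i-th value of every column forms the i-th event; extra fields are dropped.
    return [{field: columns[field][i] for field in FIELDS}
            for i in range(len(records))]
```

Every resulting dictionary corresponds to one event, which is what makes variables like {{state}} available to the next agent in the chain.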

In this scenario I send the notifications by email and push notification. Here is how to format the email so it correctly displays the information passed along by the Website Agent:

```json
{
  "subject": "{{incidentType}} Alert!",
  "expected_receive_period_in_days": "2",
  "from": "Huginn@somedomain.com",
  "body": "<h1>{{declarationTitle}}<\/h1><br>Alert! <br><ul><li>State: {{state}}<\/li><li>Date Declared: {{declarationDate}}<\/li><li>Area: {{designatedArea}}<\/li><li>ZIP: {{placeCode}}<\/li><\/ul>"
}
```

I certainly could have passed the original event through an Event Formatting Agent to build the message before it reached the email agent, but in this case I decided it was easier to write the formatting inline.

Notice that I use HTML tags such as `<h1>` and `<br>`. These are very useful for formatting emails (especially digests), where you want a visual indicator of where one piece of information ends and the next begins.
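To illustrate the digest case, the Python sketch below combines several events into one HTML body, giving each event its own heading and list and separating events with a horizontal rule. It also escapes every value, so a title containing `&` or `<` cannot break the markup; within a Huginn template, Liquid's standard escape filter (for example {{declarationTitle | escape}}) serves the same purpose. digest_html and its layout are hypothetical choices, not part of the original scenario.

```python
from html import escape


def digest_html(events: list[dict]) -> str:
    """Build one HTML body listing several events: an <h2> plus a <ul> per event."""
    parts = []
    for event in events:
        # Escape values so characters like '&' or '<' are rendered as text, not markup.
        title = escape(str(event.get("declarationTitle", "")))
        items = "".join(
            f"<li>{label}: {escape(str(event.get(key, '')))}</li>"
            for label, key in (("State", "state"), ("Area", "designatedArea"))
        )
        parts.append(f"<h2>{title}</h2><ul>{items}</ul>")
    # <hr> draws a horizontal rule between consecutive events.
    return "<hr>".join(parts)
```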

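We also use Liquid templating to include variables passed by the incoming event, such as {{declarationTitle}}. One caveat: the template labels placeCode as "ZIP", but FEMA's field description defines placeCode as an internal location code ("99" followed by the three-digit county FIPS code), so a label such as "Place code" is more accurate. The end result of this scenario is an email with all the information, for example: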

COVID-19 PANDEMIC

Alert!

State: WA

Date Declared: 2020-03-22T16:21:00.000Z

Area: Chelan (County)

ZIP: 99007
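To preview the email body before wiring up the agents, you can substitute the variables yourself. The Python sketch below is a simplified stand-in for Liquid: it handles only plain {{name}} placeholders, not filters, tags, or nested keys, and render and PLACEHOLDER are hypothetical names. The `<\/h1>` sequences in the JSON configuration are simply escaped slashes, so the decoded template contains ordinary `</h1>` tags, as written here.

```python
import re

# The email agent's body template from the configuration, with JSON escapes decoded.
BODY = ("<h1>{{declarationTitle}}</h1><br>Alert! <br><ul>"
        "<li>State: {{state}}</li><li>Date Declared: {{declarationDate}}</li>"
        "<li>Area: {{designatedArea}}</li><li>ZIP: {{placeCode}}</li></ul>")

# Matches {{name}}, optionally with spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, event: dict) -> str:
    """Replace each {{name}} with the event's value; missing keys become ''."""
    return PLACEHOLDER.sub(lambda m: str(event.get(m.group(1), "")), template)
```

Calling render(BODY, event) with the values from the example above produces the HTML behind that email.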

NOTE!

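Huginn may report these listeners as Not Working. As far as I can tell, this happens when the JSON source goes longer without changing than the agents' configured windows allow: the Website Agent is judged against expected_update_period_in_days (and in on_change mode it only emits an event when the data actually changes), while the email agent is judged against expected_receive_period_in_days. With both set to 2, a few quiet days at FEMA are enough to trigger the warning. Rest assured the agents will still fire when the data source changes, and you can raise those periods if the warning bothers you.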
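The staleness rule described in this note can be modelled in a few lines. The Python sketch below is a simplified approximation, not Huginn's actual code (which may weigh other signals, such as recent errors): an agent counts as healthy only if its last event falls within the expected window. looks_working is a hypothetical name, and note that the JSON configurations store the period as a string, so it would need converting with int() first.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def looks_working(last_event_at: Optional[datetime], expected_days: int,
                  now: Optional[datetime] = None) -> bool:
    """Approximate staleness check: healthy only if an event occurred within the window."""
    now = now or datetime.now(timezone.utc)
    if last_event_at is None:
        return False  # nothing emitted or received yet
    return now - last_event_at <= timedelta(days=expected_days)
```

With expected_days=2, an agent whose last event was three days ago is flagged, even though nothing is broken; raising the period to 7 clears the warning.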

Conclusion

Just by exploring FEMA's API documentation, we are able to pull a wealth of valuable data about events happening across the country. No need to rely on news reports: be the first to know.

This technique can be applied to countless open data sources and incorporated into your informational web!